In the intricate web of modern IT infrastructure, two powerful technologies are converging to redefine the landscape of application management: Virtual Machines (VMs) and Kubernetes. As companies across industries strive to optimize their operations and enhance scalability, the integration of these two solutions offers a promising path forward.

In this exploration, we delve into the synergy between VMs and Kubernetes, uncovering the best practices that pave the way for seamless integration. From resource allocation through namespaces to container image strategies that enhance security and efficiency, these practices hold the key to unlocking the full potential of modern application orchestration.

Join us as we embark on a journey through the intricacies of combining Virtual Machines and Kubernetes, a journey that promises to reshape how we manage applications in an increasingly dynamic and interconnected world.

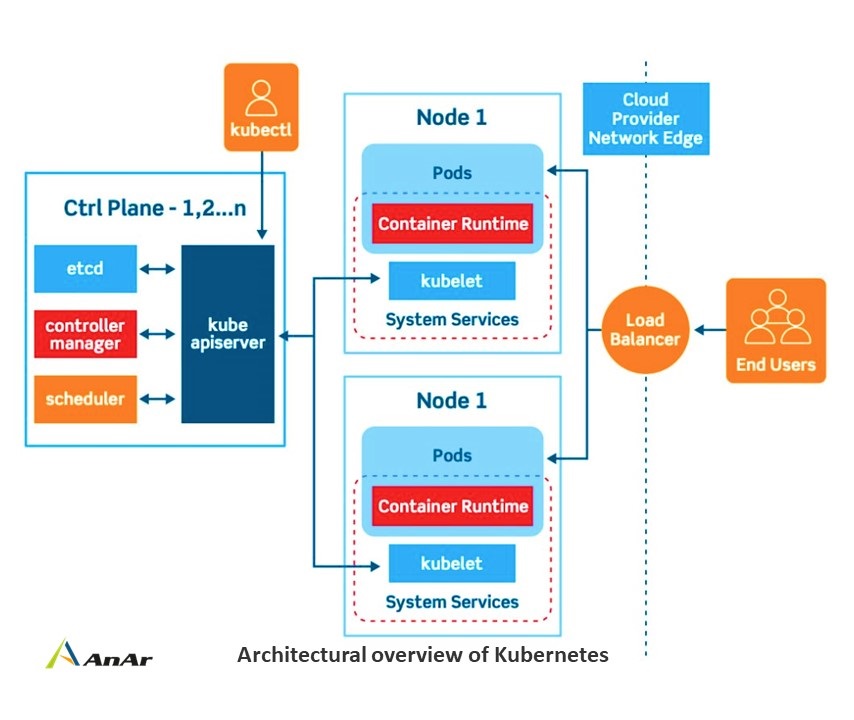

What is Kubernetes?

Kubernetes, often referred to as K8s, is an open-source platform for efficiently managing containerized workloads and services. Containers and Virtual Machines share some goals, such as optimizing resource utilization, but they take different approaches. Each VM runs its own guest operating system on virtualized hardware. Containers share the host's operating system kernel, which makes them lightweight and efficient to deploy. Companies run very different technology stacks, often mixing VMs and containers, so finding the best way to integrate Kubernetes into an existing infrastructure is a crucial question.

Current data on Kubernetes:

As the technological landscape evolves, Kubernetes continues to stand out as a pivotal force in reshaping how we approach application management. This assertion is underscored by a series of compelling statistics that highlight the significance of Kubernetes in modern IT strategies:

- Enhanced Security with Enterprise Open Source: A resounding affirmation comes from nearly 87% of IT leaders who recognize the inherent security advantages of enterprise open-source solutions. This sentiment positions Kubernetes as a secure and reliable choice, aligning with the needs of organizations striving for fortified IT infrastructures.

- Containers in Production – An Overwhelming Majority: A pivotal survey conducted by the Cloud Native Computing Foundation in 2019 showcased the rapid adoption of containers. Remarkably, 84% of the respondents were actively running containers in production environments, illustrating the prominent role they play in modern application deployment.

- Anticipating Container Surge: The data suggests that container usage will keep rising. A projected 72% increase in container utilization underscores their growing importance in IT operations.

- Kubernetes: The Heart of Cloud-Native Strategies: At the core of cloud native application strategies stands Kubernetes, enjoying resounding support with an impressive 85% approval rating. This endorsement reinforces Kubernetes as a key enabler of transformative cloud-native approaches that empower businesses to scale, innovate, and adapt.

These statistics collectively paint a powerful portrait of Kubernetes as an instrumental tool in shaping the future of application management. As enterprises navigate the dynamic technological landscape, these insights provide a compelling rationale for embracing Kubernetes as a cornerstone of their IT strategies.

Kubernetes Architecture Best Practices:

Organize with namespaces:

Namespaces help organize resources, and a single cluster can contain several of them. Resource names only need to be unique within a namespace. Creating multiple namespaces therefore reduces the risk of one team overwriting another team's services. Namespaces also break a large cluster into manageable chunks.

Namespaces can also improve performance, because namespace-scoped API requests deal with a smaller set of objects. Namespaces separate resources but do not fully isolate them, so workloads can still communicate across namespaces. Depending on your needs, you can create services with the same name in different namespaces and still reach a service in another namespace by qualifying its DNS name with that namespace.
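
The namespace advice above can be sketched in Python. This minimal example builds Namespace manifests as plain dictionaries and prints them as JSON; `kubectl apply -f` accepts JSON the same way it accepts YAML. The namespace names `team-a` and `team-b`, the `team` label, and both helper functions are hypothetical. The DNS helper assumes the cluster uses the common default domain `cluster.local`.

```python
import json


def namespace_manifest(name: str, team: str) -> dict:
    """Build a Namespace object as a plain dict, ready to be dumped as JSON."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        # The "team" label is an illustrative convention, not something Kubernetes requires.
        "metadata": {"name": name, "labels": {"team": team}},
    }


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name that reaches a Service from any namespace."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # Each team gets its own namespace, so both can run a Service named "api".
    for ns in ("team-a", "team-b"):
        print(json.dumps(namespace_manifest(ns, team=ns), indent=2))
        print(service_dns_name("api", ns))
```

Inside its own namespace, a pod can reach the Service by the short name `api`. A pod in `team-a` uses the qualified name to reach the `api` Service that belongs to `team-b`.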

Resource Requests and Limits:

Resource requests tell the scheduler how much CPU and memory a container needs, so a pod is placed only on a node that has enough resources to run it. Requests and limits also give you better control over development workloads, so teams do not create unnecessary replicas that consume shared resources. A limit must be greater than or equal to its request.

Requests and limits are set per container: each container in a pod has its own request and limit. Because a pod is scheduled as a unit, based on the combined requests of its containers, you need to set requests and limits for every container. This best practice keeps any single team or application from overconsuming cluster resources.
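
To illustrate per-container requests and limits, here is a minimal sketch that builds container specs and checks them before they reach the cluster, for example in a CI step. The API server already rejects a limit below its request, so this check only catches the mistake earlier. The quantity parser is a simplified assumption that handles common suffixes such as `250m`, `128Mi`, and `1G`, not the full Kubernetes quantity grammar. The image names are hypothetical.

```python
from fractions import Fraction
from typing import List

# Multipliers for a subset of Kubernetes quantity suffixes (exponent forms like "1e3" are not handled).
_SUFFIXES = {
    "Ki": Fraction(2**10), "Mi": Fraction(2**20), "Gi": Fraction(2**30), "Ti": Fraction(2**40),
    "k": Fraction(10**3), "M": Fraction(10**6), "G": Fraction(10**9), "T": Fraction(10**12),
    "m": Fraction(1, 1000),  # milli: "250m" CPU is a quarter of a core
}


def parse_quantity(q: str) -> Fraction:
    """Convert a quantity string such as "250m" or "128Mi" into an exact number."""
    for suffix, factor in _SUFFIXES.items():
        if q.endswith(suffix):
            return Fraction(q[: -len(suffix)]) * factor
    return Fraction(q)


def container(name: str, image: str, cpu: tuple, memory: tuple) -> dict:
    """Container spec with (request, limit) pairs for CPU and memory."""
    return {
        "name": name,
        "image": image,
        "resources": {
            "requests": {"cpu": cpu[0], "memory": memory[0]},
            "limits": {"cpu": cpu[1], "memory": memory[1]},
        },
    }


def check_resources(c: dict) -> List[str]:
    """Report missing requests/limits and any limit set below its request."""
    problems = []
    res = c.get("resources", {})
    requests, limits = res.get("requests", {}), res.get("limits", {})
    for kind in ("cpu", "memory"):
        if kind not in requests or kind not in limits:
            problems.append(f"{c['name']}: missing {kind} request or limit")
        elif parse_quantity(limits[kind]) < parse_quantity(requests[kind]):
            problems.append(f"{c['name']}: {kind} limit is below its request")
    return problems


if __name__ == "__main__":
    # Every container in the pod carries its own requests and limits.
    pod_containers = [
        container("web", "registry.example.com/web:1.4.2", ("250m", "500m"), ("128Mi", "256Mi")),
        container("sidecar", "registry.example.com/proxy:2.0.1", ("200m", "100m"), ("64Mi", "64Mi")),
    ]
    for c in pod_containers:
        print(c["name"], check_resources(c) or "ok")
```

The parser uses `Fraction` instead of floats so that comparisons such as `250m` against `0.25` are exact.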

Build Small Container Images:

It is easy to end up with huge container images, but large images bring security problems, because every extra package widens the attack surface. Smaller images build faster, use less storage, and are quicker to pull. Instead of full general-purpose base images, consider minimal ones such as Alpine, which can be roughly 10x smaller. Add only the libraries and packages your application actually needs.

Labels and Tags:

Labels are arbitrary key-value pairs that you attach to objects such as pods, which makes them useful for identifying workloads, e.g., `phase: dev` or `role: backend`. With labels attached, selecting a particular set of containers becomes simple. For images, avoid using the `latest` tag or no tag at all. An image reference without a tag pulls `latest` from the repository, and that image may not contain the changes you expect.
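
The following minimal sketch illustrates both label selection and tag pinning. It mimics an equality-based label selector and flags image references that are untagged or tagged `latest`. The tag parser is a simplified assumption, not a full implementation of the image reference grammar. It treats a `:` before the last `/` as a registry port and treats digest references (`name@sha256:...`) as pinned. The pod names, labels, and registry are hypothetical.

```python
from typing import Dict, Optional


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def image_tag(image: str) -> Optional[str]:
    """Return the tag (or digest) of an image reference, or None when no tag is given."""
    if "@" in image:
        return image.split("@", 1)[1]  # digest-pinned, e.g. name@sha256:...
    last = image.rsplit("/", 1)[-1]  # a ':' before the last '/' is a registry port, not a tag
    return last.split(":", 1)[1] if ":" in last else None


def is_pinned(image: str) -> bool:
    """True if the reference names a specific version rather than an implicit or explicit 'latest'."""
    tag = image_tag(image)
    return tag is not None and tag != "latest"


if __name__ == "__main__":
    pods = [
        {"name": "api-1", "labels": {"phase": "dev", "role": "backend"},
         "image": "registry.example.com:5000/api:1.4.2"},
        {"name": "web-1", "labels": {"phase": "dev", "role": "frontend"},
         "image": "registry.example.com:5000/web"},
    ]
    backend = [p["name"] for p in pods if matches({"phase": "dev", "role": "backend"}, p["labels"])]
    print(backend)
    for p in pods:
        print(p["name"], "pinned" if is_pinned(p["image"]) else "unpinned (latest)")
```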

Storage & Networking:

Choose storage solutions that meet your application's requirements for data availability and growth. Container images are immutable, and any data a container writes to its own filesystem is lost when the container is replaced. Kubernetes provides temporary storage by default, but for data that must persist it is better to use file, object, or database storage services from your cloud provider.

For stability and performance, select storage services that integrate through the Container Storage Interface (CSI). Volumes provide storage to containers, and Kubernetes supports both local storage devices and cloud-based storage services. You can even build your own storage plugins and expose storage either directly in a pod's volume definition or through persistent volumes.

A PersistentVolume (PV) is a piece of storage in the cluster, provisioned either dynamically through a StorageClass or manually by an administrator. A PersistentVolumeClaim (PVC) is a user's request for storage that specifies properties such as size and access mode. A best practice is to include PVCs in your configuration but never to reference PVs in container configuration, because that pins the container to a specific volume. Also define a default StorageClass. Without one, a PVC that does not specify a class cannot be dynamically provisioned and fails to get storage.
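
The PVC practice above can be sketched as two manifest builders. The pod references storage only through a claim, never through a specific PersistentVolume. The claim omits `storageClassName` unless one is given, so the cluster's default StorageClass applies if one exists. Setting the field to an empty string is different: it explicitly asks for a volume with no class. The names `orders-data` and `fast-ssd`, the image, and the mount path are hypothetical.

```python
import json
from typing import Optional


def pvc_manifest(name: str, size: str, storage_class: Optional[str] = None,
                 access_mode: str = "ReadWriteOnce") -> dict:
    """PersistentVolumeClaim; leaving storage_class as None lets the default StorageClass apply."""
    spec = {"accessModes": [access_mode], "resources": {"requests": {"storage": size}}}
    if storage_class is not None:
        spec["storageClassName"] = storage_class
    return {"apiVersion": "v1", "kind": "PersistentVolumeClaim",
            "metadata": {"name": name}, "spec": spec}


def pod_with_claim(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Pod that references storage only through a claim, never a specific PersistentVolume."""
    return {
        "apiVersion": "v1", "kind": "Pod", "metadata": {"name": pod_name},
        "spec": {
            "containers": [{"name": "app", "image": image,
                            "volumeMounts": [{"name": "data", "mountPath": mount_path}]}],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc_manifest("orders-data", "10Gi"), indent=2))
    print(json.dumps(pod_with_claim("orders", "registry.example.com/orders:3.1.0",
                                    "orders-data", "/var/lib/orders"), indent=2))
```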

Highly automated networking gives developers more flexibility and better tooling. For network security, use a private cluster and minimize the exposure of the control plane. Restrict access to the control plane with authorized networks while still allowing the connectivity it needs. Restrict traffic within the cluster using appropriate network policies.
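
A common way to restrict cluster traffic with network policies is to deny all inbound traffic in a namespace, then allow only specific flows. This minimal sketch builds both policies. They are enforced only if the cluster's network plugin supports NetworkPolicy. The namespace `shop`, the `role` labels, and port 8080 are hypothetical.

```python
import json
from typing import Dict


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace (empty podSelector) and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(name: str, namespace: str, target: Dict[str, str],
                  source: Dict[str, str], port: int) -> dict:
    """Allow TCP traffic on one port from pods labelled `source` to pods labelled `target`."""
    return {
        "apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": source}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policies = [
        default_deny_ingress("shop"),
        allow_ingress("frontend-to-backend", "shop", {"role": "backend"}, {"role": "frontend"}, 8080),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

Network policies are additive. Once the deny-all policy selects a pod, only traffic explicitly allowed by some policy reaches it.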

Monitoring:

Applications running in containers need in-depth analysis, and that goes beyond CPU or RAM utilization. Also monitor the cluster components: the API server (kube-apiserver), the scheduler (kube-scheduler), kube-controller-manager, kube-dns, cloud-controller-manager, and kube-proxy. Monitoring helps you detect threats within the cluster and understand their impact.

Monitoring tools such as Datadog, Kubewatch, and Dynatrace save you from managing alerts manually. Prometheus is open-source software that monitors applications and microservices running in containers. Jaeger traces transactions across services and helps you troubleshoot complex distributed systems. Whichever tools you select should provide thorough monitoring and tracing and support real-time responses.

AppDynamics monitors containerized applications running inside pods. To collect logs, deploy a log collector as a DaemonSet. A DaemonSet is a workload object that ensures a copy of a specific pod runs on every node in the cluster, or on a chosen subset of nodes. Audit policy logs regularly to identify threats and keep resource consumption under control. Fluentd, an open-source data collector, can maintain a centralized logging layer for your containers.
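
The DaemonSet pattern for log collection can be sketched as a manifest builder. It runs one collector pod per node, optionally restricted to a subset of nodes, and mounts the node's `/var/log` read-only. The name `log-collector`, the namespace `logging`, and the image are placeholders. A real collector such as Fluentd usually needs more configuration than shown here, such as its own config and, depending on the setup, additional host paths or permissions.

```python
import json
from typing import Dict, Optional


def log_collector_daemonset(name: str, namespace: str, image: str,
                            node_selector: Optional[Dict[str, str]] = None) -> dict:
    """DaemonSet that runs one log-collector pod per (selected) node."""
    labels = {"app": name}
    pod_spec = {
        "containers": [{
            "name": name,
            "image": image,
            # Read the node's log directory from the host, read-only.
            "volumeMounts": [{"name": "varlog", "mountPath": "/var/log", "readOnly": True}],
        }],
        "volumes": [{"name": "varlog", "hostPath": {"path": "/var/log"}}],
    }
    if node_selector:
        pod_spec["nodeSelector"] = node_selector  # limit the DaemonSet to a subset of nodes
    return {
        "apiVersion": "apps/v1", "kind": "DaemonSet",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # apps/v1 requires the selector to match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels}, "spec": pod_spec},
        },
    }


if __name__ == "__main__":
    ds = log_collector_daemonset("log-collector", "logging",
                                 "registry.example.com/log-collector:1.0.0",
                                 node_selector={"kubernetes.io/os": "linux"})
    print(json.dumps(ds, indent=2))
```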

Container Internals:

As a best practice, make the container's root filesystem read-only. It is also recommended to run one process per container, which makes health checks simpler and more effective. When several processes depend on each other, run each in its own container within the same pod.
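
The container practices above can be sketched in one pod. Each container runs a single process with `readOnlyRootFilesystem` enabled, and the dependent processes share the pod. Anything that must be written goes to a shared `emptyDir` volume instead of the image's filesystem. The pod name, images, commands, and the `/shared` path are hypothetical.

```python
import json
from typing import List


def single_process_container(name: str, image: str, command: List[str]) -> dict:
    """One process per container, with an immutable root filesystem."""
    return {
        "name": name,
        "image": image,
        "command": command,
        "securityContext": {"readOnlyRootFilesystem": True},
        # Writable data goes to a mounted volume instead of the root filesystem.
        "volumeMounts": [{"name": "shared", "mountPath": "/shared"}],
    }


def multi_container_pod(name: str, containers: List[dict]) -> dict:
    """Co-locate dependent processes in one pod; they share the emptyDir volume."""
    return {
        "apiVersion": "v1", "kind": "Pod", "metadata": {"name": name},
        "spec": {"containers": containers, "volumes": [{"name": "shared", "emptyDir": {}}]},
    }


if __name__ == "__main__":
    pod = multi_container_pod("reporting", [
        single_process_container("server", "registry.example.com/report-server:2.3.0", ["/app/server"]),
        single_process_container("syncer", "registry.example.com/report-syncer:2.3.0", ["/app/sync"]),
    ])
    print(json.dumps(pod, indent=2))
```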

DevOps Toolchain Integration:

Automation increases predictability across pre-production and production environments. Integrating DevOps toolchains with Kubernetes-based containers brings consistency and improves production. A typical toolchain includes tools for continuous delivery, source control, issue tracking, and online editing. Select automation tools that fast-track your application delivery pipeline. Automate infrastructure and operations tasks and integrate them with Kubernetes for better management.

Readiness and Liveness Probes:

Health-check probes help you avoid pod failures by letting Kubernetes know whether the processes in each container are healthy and running correctly. In production, a readinessProbe checks whether an application is ready to serve traffic. Kubernetes sends traffic to a pod only after it passes this check. A livenessProbe performs an ongoing health check to ensure the application is responsive and running as intended. If it keeps failing, the kubelet restarts the container.
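
The readiness and liveness checks above can be sketched as a container spec with both probes defined as HTTP GET checks. For an HTTP probe, any status code from 200 to 399 counts as success. The paths `/ready` and `/healthz`, port 8080, and the timing values are assumptions. The application itself must serve those endpoints.

```python
import json


def http_probe(path: str, port: int, initial_delay: int = 5,
               period: int = 10, failure_threshold: int = 3) -> dict:
    """HTTP GET probe; a status code from 200 to 399 counts as success."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay,
        "periodSeconds": period,
        "failureThreshold": failure_threshold,
    }


def web_container(image: str, port: int = 8080) -> dict:
    return {
        "name": "web",
        "image": image,
        "ports": [{"containerPort": port}],
        # While this fails, the pod is removed from Service endpoints and receives no traffic.
        "readinessProbe": http_probe("/ready", port),
        # After failure_threshold consecutive failures, the kubelet restarts the container.
        "livenessProbe": http_probe("/healthz", port, initial_delay=15),
    }


if __name__ == "__main__":
    print(json.dumps(web_container("registry.example.com/web:1.4.2"), indent=2))
```

The liveness probe gets a longer initial delay so that a slow-starting application is not restarted before it has a chance to come up.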

Security:

Kubernetes security best practices are crucial: 7 out of 10 companies detected misconfigurations in their environments. By default, a container runs as whatever user its image specifies, which is often root, but you can configure it to run as a non-root user. Running as root grants more permissions than the workload requires, which benefits attackers if the container is compromised. If you have not changed the user from root, a compromised container could give attackers easy access to your host.
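
To make the non-root advice concrete, here is a minimal sketch of a container `securityContext` that refuses to run as root and drops extra privileges. It also includes a conservative check that flags container specs that may still run as root. UID 10001 is an arbitrary assumption and must be usable by your image. The check looks only at the container-level `securityContext` and ignores any pod-level settings, which is a simplification.

```python
def non_root_security_context(uid: int = 10001) -> dict:
    """Container securityContext that refuses to run as root and drops extra privileges."""
    return {
        "runAsNonRoot": True,        # the kubelet refuses to start the container as UID 0
        "runAsUser": uid,            # arbitrary non-zero UID
        "allowPrivilegeEscalation": False,
        "capabilities": {"drop": ["ALL"]},
    }


def may_run_as_root(container: dict) -> bool:
    """Conservative check of a container spec (pod-level securityContext is ignored here)."""
    sc = container.get("securityContext", {})
    if "runAsUser" in sc:
        return sc["runAsUser"] == 0
    # Without runAsNonRoot, the image's default user (often root) applies.
    return not sc.get("runAsNonRoot", False)


if __name__ == "__main__":
    containers = [
        {"name": "api", "image": "registry.example.com/api:1.4.2",
         "securityContext": non_root_security_context()},
        {"name": "legacy", "image": "registry.example.com/legacy:0.9.0"},
    ]
    for c in containers:
        print(c["name"], "review: may run as root" if may_run_as_root(c) else "ok")
```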

Avoid sharing the cluster's certificate authority (CA) key, even with the Chief Technology Officer. Custody of the key should remain the sysadmin's responsibility. Encrypt all credentials and secrets before you store them. Keeping plain Kubernetes Secrets in an infrastructure-as-code (IaC) repository may make builds easier to reproduce, but exposing those secrets can cost far more than the effort you save.

Pod IPs change as pods are rescheduled, and a Service's cluster IP changes if the Service is recreated, so neither is a reliable address. Use the Service's DNS name instead. Use role-based access control (RBAC) to manage permissions on resources. Grant a Role for namespaced resources and a ClusterRole for cluster-scoped (non-namespaced) resources.
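
The Role versus ClusterRole distinction can be sketched with RBAC manifest builders. A namespaced Role grants read access to pods in one namespace and is bound to a service account. A ClusterRole covers cluster-scoped nodes. The names `pod-reader`, `node-reader`, `team-a`, and `ci-bot` are hypothetical. A ClusterRole is granted through a ClusterRoleBinding, which is omitted here for brevity.

```python
import json
from typing import List, Sequence

RBAC_API = "rbac.authorization.k8s.io/v1"


def rule(resources: Sequence[str], verbs: Sequence[str], api_groups: Sequence[str] = ("",)) -> dict:
    """One policy rule; "" is the core API group (pods, services, nodes, ...)."""
    return {"apiGroups": list(api_groups), "resources": list(resources), "verbs": list(verbs)}


def role(name: str, namespace: str, rules: List[dict]) -> dict:
    """Role: permissions that apply only inside one namespace."""
    return {"apiVersion": RBAC_API, "kind": "Role",
            "metadata": {"name": name, "namespace": namespace}, "rules": rules}


def cluster_role(name: str, rules: List[dict]) -> dict:
    """ClusterRole: needed for cluster-scoped resources such as nodes."""
    return {"apiVersion": RBAC_API, "kind": "ClusterRole",
            "metadata": {"name": name}, "rules": rules}


def role_binding(name: str, namespace: str, role_name: str, service_account: str) -> dict:
    """Grant a Role to a ServiceAccount in the same namespace."""
    return {
        "apiVersion": RBAC_API, "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": service_account, "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role_name},
    }


if __name__ == "__main__":
    read = ["get", "list", "watch"]
    objects = [
        role("pod-reader", "team-a", [rule(["pods"], read)]),
        role_binding("ci-reads-pods", "team-a", "pod-reader", "ci-bot"),
        cluster_role("node-reader", [rule(["nodes"], read)]),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": objects}, indent=2))
```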

Conclusion

You may need a different approach for each application when applying these best practices to optimize containerized workloads. For example, running databases on Kubernetes is easier if your applications and databases are managed with the same tools.

Over 24,500 companies use Kubernetes, typically with 500 to 10,000 employees. By industry, 32% of these companies are in computer software and 13% in information technology and services. Well-known companies using Kubernetes include Shopify, StackShare, and Google. Popular tools that integrate with Kubernetes include Ansible, Docker, Microsoft Azure, and Google Compute Engine.

What’s next

Take a look at our other blogs on Kubernetes.
