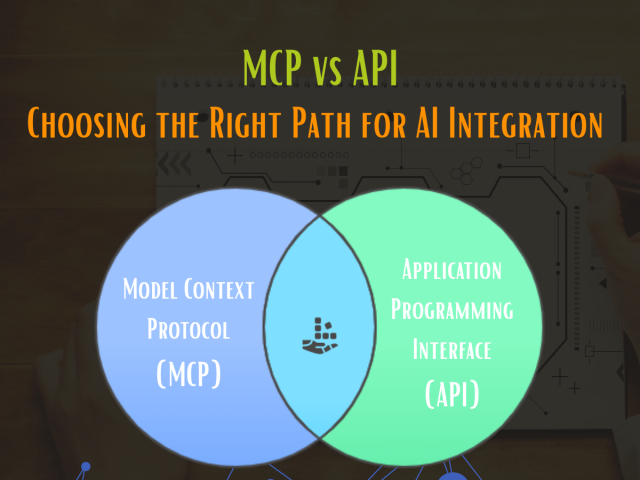

As Large Language Models (LLMs) and AI agents become central to modern applications, their true power lies in the ability to interact dynamically with external data sources and services. This interaction extends their capabilities beyond static knowledge, enabling real-time access to information and execution of complex workflows.

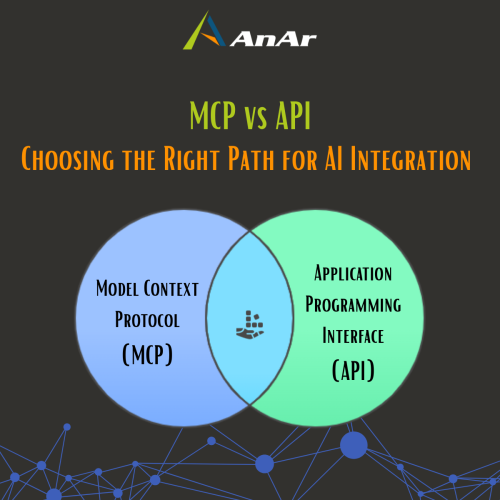

Two key mechanisms enable this integration: Application Programming Interfaces (APIs) and the emerging Model Context Protocol (MCP). Understanding their differences and complementary roles is crucial for designing scalable, flexible AI systems.

Why LLMs Need External Connections

Think of an LLM as a brilliant but bookish assistant who has read millions of books but can’t check today’s news or your calendar without help. To be truly useful, the assistant needs a universal connector to external tools and data — a way to plug into the real world.

What is an API? The Classic Connector

An API is like a custom-built bridge between two islands — it allows one system to request data or services from another by following a specific set of rules.

- APIs define endpoints and data formats (usually JSON over HTTP).

- They abstract the internal workings of a service, so the client only needs to know how to send requests and interpret responses.

- RESTful APIs, the most common style, use standard HTTP verbs (GET, POST, PUT, DELETE) to perform operations.

- APIs are general-purpose and widely used across industries for everything from payment processing to social media integration.

However, each API is unique — like a different bridge with its own toll system, traffic rules, and signage. This means developers often need to build custom adapters for each API an AI agent must use.
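To make the adapter problem concrete, here is a minimal Python sketch. Both payloads and both adapter functions are hypothetical, invented only for illustration; they stand in for two unrelated weather APIs that return the same fact in different shapes and units.

```python
from dataclasses import dataclass


@dataclass
class Forecast:
    """Common shape the AI agent wants, whatever the upstream API returns."""
    city: str
    temp_c: float


# Hypothetical payloads, shaped the way two unrelated weather APIs might respond.
SERVICE_A_RESPONSE = {"location": {"name": "Pune"}, "current": {"temp_c": 31.0}}
SERVICE_B_RESPONSE = {"city": "Pune", "temperature": {"value": 87.8, "unit": "F"}}


def adapt_service_a(payload: dict) -> Forecast:
    """Adapter for the (hypothetical) nested, Celsius-based format."""
    return Forecast(payload["location"]["name"], payload["current"]["temp_c"])


def adapt_service_b(payload: dict) -> Forecast:
    """Adapter for the (hypothetical) flat format that may report Fahrenheit."""
    temp = payload["temperature"]
    value = temp["value"]
    if temp["unit"] == "F":
        value = (value - 32) * 5 / 9  # convert Fahrenheit to Celsius
    return Forecast(payload["city"], round(value, 1))


forecasts = [adapt_service_a(SERVICE_A_RESPONSE), adapt_service_b(SERVICE_B_RESPONSE)]
print(forecasts)
```

Every additional service means another adapter of this kind, which is the maintenance cost the bridge analogy describes.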

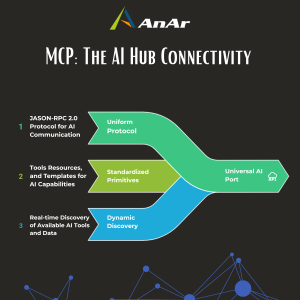

What is MCP? The Universal AI Port

Imagine MCP as a universal USB-C port for AI applications — a standardized, versatile connection point that lets AI agents plug into a wide range of external services and tools without needing a new adapter for each one.

- MCP defines a uniform protocol based on JSON-RPC 2.0. AI hosts (the “laptops”) run MCP clients (the “cables”), and each client connects to an MCP server (the “peripheral”).

- MCP servers expose primitives, which are standardized capabilities grouped into:

  - Tools: Actions the AI can invoke, like “get weather” or “create calendar event.”

  - Resources: Read-only data the AI can fetch on demand.

  - Prompt Templates: Predefined prompt structures to guide AI queries.

- A standout feature is dynamic discovery: AI agents can query MCP servers at runtime to discover available tools and data, adapting automatically to new or updated capabilities without redeployment.

The metaphor of MCP as a USB-C port is taken from IBM’s YouTube video, “MCP vs API: Simplifying AI Agent Integration with External Data.”
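The discovery-then-invoke flow can be sketched in a few lines of Python. The `tools/list` and `tools/call` method names and the JSON-RPC 2.0 envelope follow the MCP specification; everything else here (the `get_weather` tool, the `TOOLS` registry, and the in-memory `handle` dispatcher) is a hypothetical stand-in for a real server. The initialization handshake and the transport layer are left out.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)


def rpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Hypothetical tool registry standing in for an MCP server's capabilities.
TOOLS = {
    "get_weather": {
        "description": "Return the current weather for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": lambda args: f"Sunny in {args['city']}",
    }
}


def handle(request: dict) -> dict:
    """Toy in-memory dispatcher for two MCP methods (no transport, no handshake)."""
    if request["method"] == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif request["method"] == "tools/call":
        tool = TOOLS[request["params"]["name"]]
        text = tool["handler"](request["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # Standard JSON-RPC 2.0 error code for an unknown method.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# 1. Discover what the server offers at runtime.
listing = handle(rpc_request("tools/list"))
tool_name = listing["result"]["tools"][0]["name"]

# 2. Invoke a discovered tool by name.
reply = handle(rpc_request("tools/call", {"name": tool_name, "arguments": {"city": "Pune"}}))
print(json.dumps(reply, indent=2))
```

Because the client learns tool names and input schemas from the `tools/list` response rather than from hard-coded knowledge, adding an entry to the registry makes a new tool visible on the next listing without any client change.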

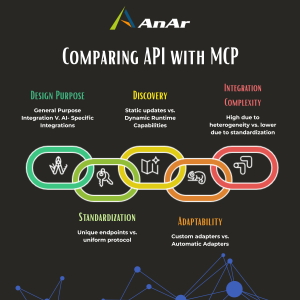

Comparing MCP and APIs: Key Differences

| Aspect | APIs | MCP |
|---|---|---|
| Design Purpose | General-purpose integration across systems | Purpose-built for AI and LLM external interaction |
| Standardization | Each API has unique endpoints and data formats | Uniform protocol and interface across all servers |
| Discovery | Static; clients must be updated for new features | Dynamic discovery of capabilities at runtime |
| Adaptability | Requires custom adapters for each API | AI agents adapt automatically to new tools/data |
| Integration Complexity | Higher due to heterogeneity of APIs | Lower due to standardized interaction patterns |

Why MCP is a Game Changer for AI Integration

- Plug-and-play flexibility: Like USB-C revolutionized device connectivity, MCP simplifies AI integration by providing a single, consistent interface to diverse external systems.

- Real-time adaptability: Dynamic discovery lets AI agents “see” and use new tools instantly, enabling faster innovation and reducing maintenance overhead.

- Simplified development: Developers build AI agents that interact with many services without writing bespoke adapters for each API.

- Enhanced scalability: MCP’s microservice-style architecture allows independent scaling and updating of individual services without disrupting the whole system.

MCP and APIs: Complementary Layers in AI Architecture

MCP and APIs are not competitors. They work together as different parts of the same AI system.

- MCP as a Translator: Often, an MCP server acts like a translator for an existing API. For example, an MCP server for GitHub might offer a simple command like “list repositories,” but behind the scenes, it uses GitHub’s real API to perform the task.

- Simpler for AI Systems: MCP makes it easier for AI to interact with complex services. It provides a cleaner and more consistent way to connect, without needing to read complicated API instructions or handle different login methods.

- Growing Use Across Tools: MCP servers are now available for many services, such as file systems, Google Maps, Docker, and enterprise data platforms. This helps AI systems connect with them more easily and in a standardized way.
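The translator role can be illustrated with a small Python sketch. It assumes a hypothetical tool handler named `list_repositories` that wraps GitHub’s REST endpoint `GET /user/repos`; the injected `fetch` parameter and the `fake_fetch` stand-in are illustration-only devices so the example runs without network access or a real token.

```python
import json
import urllib.request
from typing import Callable

GITHUB_API = "https://api.github.com"


def http_get_json(url: str, headers: dict) -> list:
    """Real HTTP fetch; not exercised in the offline demo below."""
    req = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def list_repositories(token: str, fetch: Callable[[str, dict], list] = http_get_json) -> dict:
    """Hypothetical tool handler: one simple action, one REST call behind it."""
    repos = fetch(
        f"{GITHUB_API}/user/repos",
        {"Authorization": f"Bearer {token}", "Accept": "application/vnd.github+json"},
    )
    names = [repo["full_name"] for repo in repos]
    # Shape the answer as MCP-style text content for the model.
    return {"content": [{"type": "text", "text": "\n".join(names)}]}


def fake_fetch(url: str, headers: dict) -> list:
    """Stand-in for the network so the sketch runs offline."""
    assert url == "https://api.github.com/user/repos"
    return [{"full_name": "octocat/hello-world"}, {"full_name": "octocat/spoon-knife"}]


result = list_repositories("dummy-token", fetch=fake_fetch)
print(result["content"][0]["text"])
```

The AI agent only ever sees the tool name and its text result; the endpoint path, headers, and authentication stay inside the server, which is why the underlying API can change without the agent being rewritten.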

Strategic Implications for AnAr Solutions

Understanding and adopting MCP brings several key benefits for us as we build advanced AI capabilities:

- Faster AI Integration: MCP simplifies how AI agents connect with different systems. This reduces development time and lets our teams focus more on delivering core functionality and business value.

- Greater Flexibility: With its standardized and dynamic structure, MCP allows us to create AI solutions that can easily adapt to changing client needs and evolving technologies.

- Improved Security and Control: MCP provides a clear way to define what AI agents are allowed to do. This helps us maintain strong governance and protect sensitive client data.

- Future-Ready Solutions: By aligning with modern standards like MCP, AnAr Solutions ensures our AI systems stay compatible with the next generation of enterprise tools, keeping our clients ahead in their digital journey.

MCP vs. API: A Comparative Analysis for Enterprise Architects

While both MCP and APIs provide integration frameworks, choosing the right approach directly impacts how we build scalable, intelligent systems.

Here’s a comparison based on practical outcomes for our AI initiatives:

| Aspect | Traditional APIs | Model Context Protocol (MCP) |
|---|---|---|
| Purpose | Suitable for standard system integration projects | Ideal for building intelligent, AI-native solutions that need real-time data access and tool usage |
| Development Efficiency | Requires custom integration logic for each service; slows down AI agent development | Enables faster prototyping and deployment through a uniform interface, reducing engineering overhead |
| Adaptability | Limited flexibility; adapting to new tools or features often requires manual work | AI agents can dynamically discover and use available functions, supporting more adaptive and future-proof systems |
| Standardization Impact | Varies by vendor; integration patterns differ and need case-by-case handling | Consistent patterns across services allow for reusable components and easier maintenance |
| AI Alignment | Requires heavy customization for each API | Designed for seamless interaction with LLMs and AI agents, improving reliability and reducing complexity |
| Strategic Benefit | Supports current enterprise system needs | Positions AnAr Solutions at the forefront of AI service integration with modern, scalable frameworks |
| Use Case Focus | CRM sync, payment APIs, microservices | LLM tool use, autonomous agent workflows, access to structured enterprise knowledge in real time |

Conclusion

MCP is not a replacement for APIs but a specialized protocol designed to empower AI agents and LLMs by providing a standardized, dynamic, and AI-friendly interface to external data and tools. It enhances AI integration by enabling runtime discovery, uniform interaction patterns, and seamless tool invocation.

Meanwhile, traditional APIs remain foundational for software integration, offering performance, security, and deterministic control essential for many applications.

Together, MCP and APIs form complementary layers in the evolving AI ecosystem, with MCP acting as a universal adapter layer that leverages existing APIs to unlock the full potential of AI agents.
