AI-powered coding assistants like GitHub Copilot, Cursor, and Tabnine have been hailed as productivity game changers since their emergence. Promising to automate routine coding tasks, provide real-time suggestions, and reduce mental overhead, these tools are poised to transform modern software development. However, contrary to optimistic narratives, recent rigorous studies reveal a more nuanced reality: AI coding assistants can actually reduce productivity in certain contexts, particularly among experienced developers working on complex, familiar codebases.

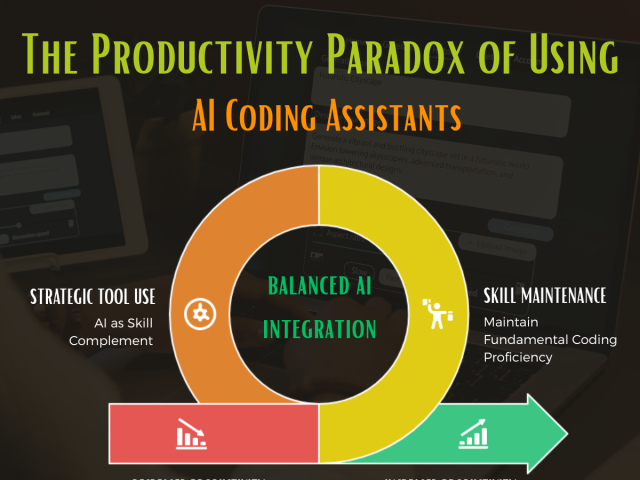

The Productivity Paradox: Perception vs. Reality

A pivotal study by METR in 2025 revealed that experienced developers took 19% longer to complete coding tasks when using popular AI assistants, despite believing they were about 20% faster. This gap highlights a widespread “perception vs. reality” paradox: while 88% of GitHub Copilot users say they feel more productive, objective measurements can tell a different story.
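To make the size of that gap concrete, the sketch below applies the two METR figures quoted above to a hypothetical 10-hour task. It assumes "20% faster" means developers believed the task took 20% less time. The function name, its parameters, and the 10-hour baseline are illustrative and do not come from the study.

```python
def perception_gap(baseline_hours: float,
                   actual_slowdown: float,
                   perceived_speedup: float) -> dict:
    """Compare measured and believed task time against an unassisted baseline.

    actual_slowdown:   fraction of extra time measured with AI (0.19 = 19% longer)
    perceived_speedup: fraction of time developers believed they saved
                       (0.20 = 20% less time; this reading is an assumption)
    """
    actual = baseline_hours * (1 + actual_slowdown)
    perceived = baseline_hours * (1 - perceived_speedup)
    return {
        "actual_hours": actual,
        "perceived_hours": perceived,
        "gap_hours": actual - perceived,
        "overrun_ratio": actual / perceived,
    }


# Hypothetical 10-hour task, using the percentages quoted in the text.
result = perception_gap(baseline_hours=10.0,
                        actual_slowdown=0.19,
                        perceived_speedup=0.20)
print(f"actual: {result['actual_hours']:.1f} h, "
      f"perceived: {result['perceived_hours']:.1f} h, "
      f"gap: {result['gap_hours']:.1f} h")
```

Under these assumptions, a task that would take 10 hours unassisted takes about 11.9 hours with the assistant while feeling like about 8 hours, so the measured time is nearly one and a half times the believed time.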

“Developers don’t lose time writing code, they lose it gathering information.”

— Former Uber Eats engineer

This contradiction may stem from cognitive biases such as effort justification—developers who wrestle with prompting, interpreting, and integrating AI outputs tend to overvalue the tools after investing considerable mental energy.

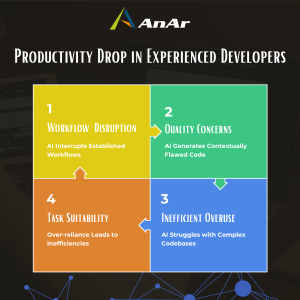

Why Does Productivity Drop for Experienced Developers?

AI code assistants can disrupt productivity for seasoned programmers, especially in complex, mature codebases. Here’s why:

- Workflow Disruption: Experienced developers refine their workflows over years. AI assistants may break “flow state” by requiring frequent prompts or validation of suggestions.

- Quality Concerns: AI often delivers code that’s syntactically excellent but contextually flawed, leading to rework and defect introduction, especially in codebases larger than 50,000 lines.

- Inefficient Overuse: Over-relying on AI without targeted usage can reinforce inefficiencies for teams not experienced at prompt engineering or critical review.

- Task Suitability: AI assistants excel in new (greenfield) projects and with boilerplate, not in navigating and refactoring inherited, complex code.

Selective Productivity Gains: When AI Works Well

AI assistants genuinely shine in specific contexts, particularly for less experienced developers and certain responsibilities:

| Use Case | Productivity Impact |
|---|---|
| Junior/Novice developer work | 20–40% improvement |
| Boilerplate/repetitive code | Sharp reduction in development time |
| Unit test generation & documentation | Up to 90% and 59% time savings, respectively |
| Onboarding/new team members | Faster ramp-up and contextual explanations |
| Educational support | Deeper understanding of unfamiliar code |

For less experienced developers or tasks involving tedious repetition, AI assistants meaningfully reduce workload, improve code quality, and accelerate timelines.

Real-World Insights and Cautionary Tales

A particularly insightful perspective comes from Korny Sietsma, who documented his experience integrating an AI code assistant (Claude) into a large-scale, mature ASP.NET Core application. His example illustrates both the promise and the complexity of using AI in non-trivial, real-world scenarios.

Sietsma describes a case where he needed to trigger events in Kafka when changes occurred in the business-contact relationship. Leveraging AI, he observed:

- Human-in-the-Loop Criticality: While Claude generated good first-pass code, Sietsma had to carefully guide architectural details, correct Kafka topic choices, and ensure domain conventions were followed. He likened Claude to managing “a junior developer”—someone eager, fast, but lacking deep project context.

- Error Recovery and Debugging: When the AI misunderstood asynchronous functional patterns (such as chaining with monadic `Result` and `Option` types), it struggled to propagate the right types, resulting in syntactic errors that needed manual diagnosis.

- Testing and Integration: Claude quickly provided integration tests and located similar testing patterns from other files. However, edge cases and infrastructure mismatches (absent producers in Kafka setup) still required human troubleshooting.
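For readers unfamiliar with the pattern mentioned in the debugging point above, here is a minimal sketch of chaining asynchronous steps through a `Result` type. It is written in Python rather than C#, it is not Sietsma's code, and every name in it (`Ok`, `Err`, `bind_async`, `load_contact`, `build_event`) is hypothetical. It only illustrates why each step must return the right wrapped type for the chain to hold together.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Generic, TypeVar, Union

T = TypeVar("T")
U = TypeVar("U")


@dataclass(frozen=True)
class Ok(Generic[T]):
    """Successful outcome carrying a value."""
    value: T


@dataclass(frozen=True)
class Err:
    """Failed outcome carrying an error message."""
    error: str


Result = Union[Ok[T], Err]


async def bind_async(result: Result[T],
                     step: Callable[[T], Awaitable[Result[U]]]) -> Result[U]:
    """Run the next async step only if the previous one succeeded."""
    if isinstance(result, Err):
        return result  # short-circuit: pass the error along unchanged
    return await step(result.value)


# Hypothetical steps, loosely modelled on "emit an event when a contact changes".
async def load_contact(contact_id: int) -> Result[dict]:
    if contact_id <= 0:
        return Err("invalid contact id")
    return Ok({"id": contact_id, "business_id": 7})


async def build_event(contact: dict) -> Result[str]:
    return Ok(f"contact {contact['id']} linked to business {contact['business_id']}")


async def pipeline(contact_id: int) -> Result[str]:
    loaded = await load_contact(contact_id)
    return await bind_async(loaded, build_event)


print(asyncio.run(pipeline(1)))
print(asyncio.run(pipeline(0)))
```

If a step returned a bare value instead of `Ok(...)` or `Err(...)`, or if an `await` were dropped, the next link in the chain would receive the wrong type. That is the kind of mismatch the anecdote describes having to diagnose by hand.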

“This isn’t 10x speed—but it’s not junk either. This worked, with some human guidance… I’d prefer [Claude] admitting confusion and needing help than hallucinating an incorrect result.” — Korny Sietsma

Sietsma’s experience highlights the importance of human guidance, domain expertise, and critical review when working with AI assistants. The best results emerged when he adopted a hybrid approach: letting the AI generate, but continually intervening at key decision points.

Voices From the Field

Experienced developers often report a mixture of excitement and frustration:

- “The time spent understanding and fixing AI’s context-missing code outweighs its initial speed boost.”

- “For boilerplate, it’s perfect. For legacy modules, it’s a minefield.”

- “AI helps us standardize documentation, but our senior engineers often hand-edit what the tool generates.”

The “Goldilocks Problem” in AI Coding

No single AI assistant fits every workflow. Some function best as background helpers—suggesting only when prompted—while others push constant recommendations, demanding continual developer attention. Finding the right fit is crucial and unique to each team.

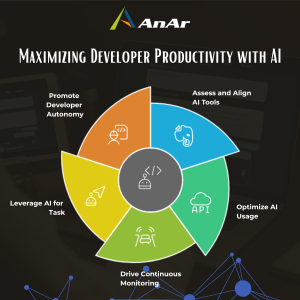

Best Practices: How to Reap the Benefits Without Falling Behind

Given the dual realities seen in real-world usage, how can developer teams integrate AI coding tools to avoid decreased productivity and realize their full potential? Here are implementable best practices and strategies based on research and industry expert recommendations:

1. Assess and Align AI Tools to Developer Experience and Project Type

- Conduct an in-depth evaluation of your development workflows and pain points, especially identifying bottlenecks in debugging, code reviews, onboarding, and standardization.

- Choose AI assistants compatible with your technology stack, language ecosystem, and security requirements. Tools that can learn and adapt to your codebase contextually offer better long-term integration.

- Understand that experienced developers in mature projects might benefit less from AI code generation and more from tools aiding documentation, searching, or code comprehension.

2. Optimize AI Usage to Complement Developer Workflow, Not Disrupt It

- Encourage developers to use AI tools judiciously, avoiding excessive prompting or overdependence that leads to frequent stops and starts.

- Configure AI suggestions to fit coding style and team conventions through training and feedback loops, reducing costly post-generation fixes.

- Embed AI tightly into existing IDEs, CI/CD pipelines, and version control workflows to minimize friction and context switching.

- Train teams on how to craft effective prompts, interpret AI outputs critically, and seamlessly integrate suggestions without breaking flow.

3. Drive Continuous Monitoring and Feedback on AI Impact

- Establish clear metrics (KPIs) such as task completion time, debugging frequency, code quality, and developer satisfaction to objectively track AI’s effects.

- Use data-driven insights to fine-tune AI models, adjust usage guidelines, and improve tooling integration iteratively based on real-world results.

- Facilitate open channels for developer feedback to capture pain points and success stories and keep AI assistance aligned with evolving team needs.
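As one possible starting point for the metrics described above, the sketch below compares median completion time and review defects between AI-assisted and unassisted tasks. The record fields, function names, and sample numbers are all hypothetical. A real rollout would pull these values from an issue tracker or CI system and would need enough tasks for the comparison to be meaningful.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass(frozen=True)
class TaskRecord:
    """One completed task; every field is a hypothetical KPI input."""
    task_id: str
    used_ai: bool
    minutes_to_complete: float
    defects_found_in_review: int


def summarize(records: list[TaskRecord], used_ai: bool) -> dict[str, float]:
    """Median completion time and mean defects per task for one group."""
    group = [r for r in records if r.used_ai == used_ai]
    if not group:
        raise ValueError("no tasks recorded for this group")
    return {
        "tasks": len(group),
        "median_minutes": median(r.minutes_to_complete for r in group),
        "defects_per_task": mean(r.defects_found_in_review for r in group),
    }


def relative_change(with_ai: float, without_ai: float) -> float:
    """Fractional change versus the unassisted baseline (positive = higher with AI)."""
    return (with_ai - without_ai) / without_ai


# Made-up sample data for illustration only, not measurements.
records = [
    TaskRecord("T-1", True, 50, 1),
    TaskRecord("T-2", True, 70, 2),
    TaskRecord("T-3", True, 90, 0),
    TaskRecord("T-4", False, 40, 0),
    TaskRecord("T-5", False, 60, 1),
    TaskRecord("T-6", False, 80, 2),
]

ai = summarize(records, used_ai=True)
baseline = summarize(records, used_ai=False)
change = relative_change(ai["median_minutes"], baseline["median_minutes"])
print(f"Median time change with AI: {change:+.1%}")
```

The median is used instead of the mean for completion time so that a single unusually long task does not dominate the comparison. Developer satisfaction, which the list also names, would come from surveys and is left out of this sketch.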

4. Leverage AI for Tasks Where It Excels

- Delegate boilerplate coding, unit test generation, and documentation to AI assistants to free developers for high-value creative and problem-solving tasks.

- Use AI as an onboarding aid to accelerate new developer ramp-up by providing contextual explanations and relevant code snippets quickly.

- Employ AI in automated code review and security scanning integrated into CI/CD pipelines for early defect detection and faster release cycles.

5. Promote Developer Autonomy and AI Literacy

- Position AI assistants as augmentation tools that empower developers rather than replacements demanding blind trust.

- Invest in training sessions and documentation to raise developer competency in effective AI use, recognizing its strengths and current limitations.

- Encourage developers to maintain critical thinking, review AI suggestions thoroughly, and continuously improve prompting skills to maximize output quality.

The Road Ahead: Evolving Together with AI Coding Assistants

The METR study cautions that early-2025 AI capabilities still have limitations, especially for senior developers dealing with complex legacy projects. However, complementary evidence from Google and industry research demonstrates the potential for 21–45% productivity improvements in appropriate contexts with optimized implementation.

The successful future of AI in development depends on organizations adopting a strategic, measured approach incorporating human expertise, continuous optimization, and tailored tool use. With better AI model maturity, seamless workflows, and improved developer training, AI assistants will increasingly augment and accelerate software innovation.

Conclusion

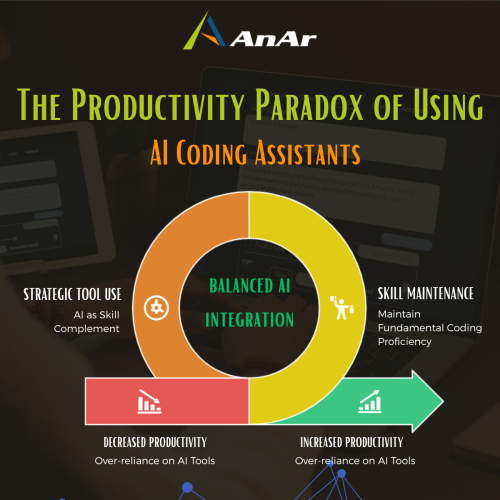

AI code assistants present a productivity paradox: they are often perceived as boosters but can introduce inefficiencies among experienced developers due to workflow disruption, low-quality output, and inappropriate usage. Software teams can unlock genuine productivity gains while minimizing pitfalls by:

- Leveraging AI strengths thoughtfully

- Optimizing team workflows

- Implementing consistent monitoring

- Empowering developers with the right training and autonomy

Embracing AI as a collaborator rather than a replacement empowers developers to focus on high-impact work, innovate faster, and build better software for the future.
