In today’s digital world, data is generated at an unprecedented pace across various platforms and storage systems. However, much of this raw data remains underutilized due to its unstructured nature and lack of context. Businesses need a powerful solution to organize, refine, and analyze this data for meaningful insights. That’s where Microsoft Azure Data Factory comes in—a fully managed cloud service that simplifies the process of transforming, integrating, and optimizing data pipelines. Whether you’re managing hybrid ETL processes or tackling complex data integration projects, Azure Data Factory equips your business to unlock the full potential of big data, fueling smarter decisions, innovation, and competitive growth.

In this blog, we’ll explore the key components and practical applications of Azure Data Factory, demonstrating how this versatile tool helps businesses orchestrate data transformation and extract valuable insights.

So, what is Azure Data Factory MEANS?

Azure Data Factory is a fully managed cloud service built to handle complex hybrid ETL (Extract-Transform-Load), ELT (Extract-Load-Transform), and data integration projects. It automates and manages the movement and transformation of data across various storage systems and compute resources. With Azure Data Factory, users can design and schedule data-driven workflows—referred to as pipelines—to extract data from different sources and create sophisticated ETL processes. These pipelines can leverage visual data flows or use services like Azure HDInsight, Azure Databricks, Azure Synapse Analytics, and Azure SQL Database for processing.

The core objective of Data Factory is to gather data from multiple sources, transform it into a usable format, and streamline processing for end users. Since data sources often come in diverse formats and may contain irrelevant information, Azure Data Factory provides the tools to transform and clean the data, ensuring compatibility with other services within a data warehouse solution.

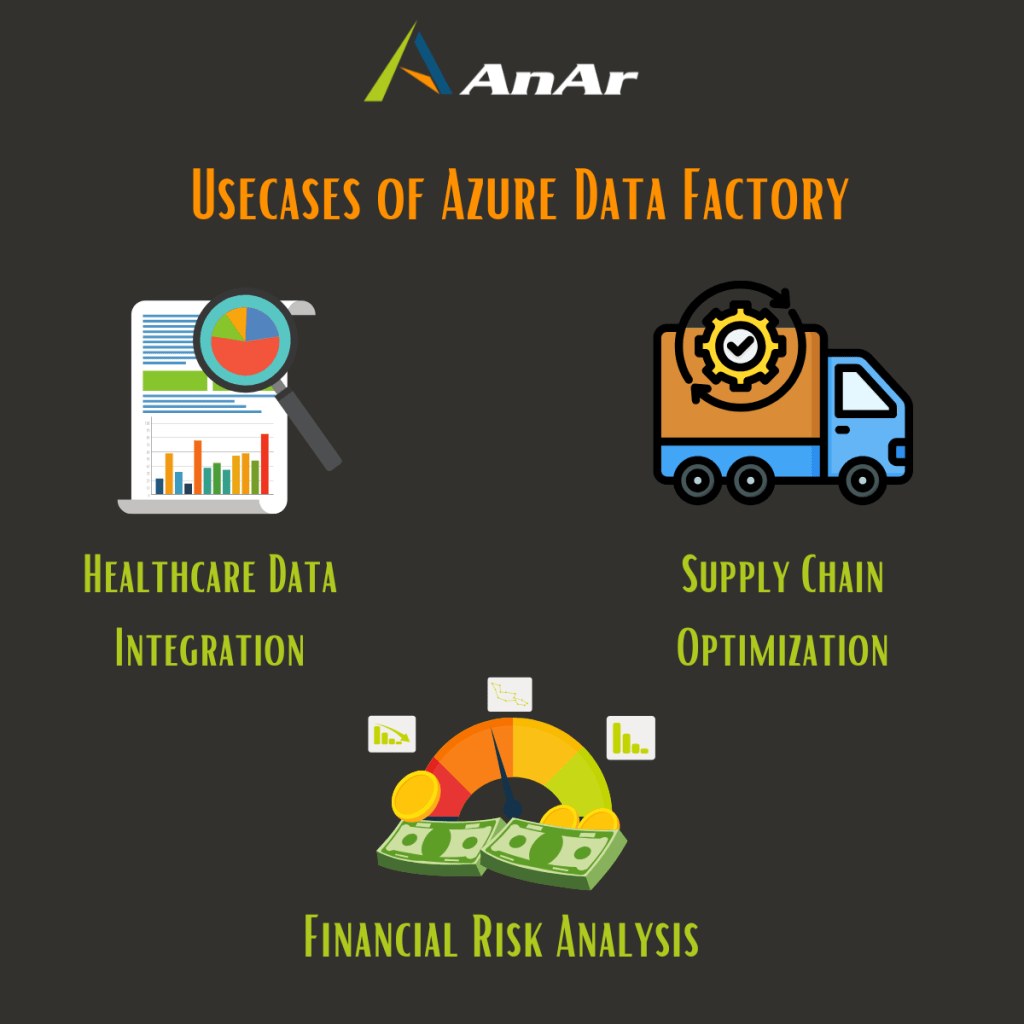

Use cases

For example, imagine a healthcare provider managing data from multiple sources like patient records, hospital equipment, and medical devices. The organization aims to analyze this data to enhance patient outcomes, identify cost-saving opportunities, and improve overall healthcare services.

To achieve this, they need to integrate the data from these diverse sources into a unified cloud-based platform, such as Azure Data Lake Storage. The data must be cleaned, transformed, and enriched with additional reference information, such as healthcare guidelines or medical research.

The organization plans to use Azure Data Factory to orchestrate the entire data movement and transformation process. Pipelines will be created to ingest data from various sources, transform it using services like Azure Databricks or Azure HDInsight, and store it in Azure Data Lake Storage for further use.

In addition, Azure Machine Learning will be employed to develop predictive models, enhancing the organization’s ability to analyze the data and improve decision-making. Finally, Azure Synapse Analytics will be used to generate interactive dashboards and reports for stakeholders.

By leveraging Azure Data Factory, the healthcare provider can streamline its data integration and transformation efforts, reduce operational costs, and ultimately deliver better patient care.

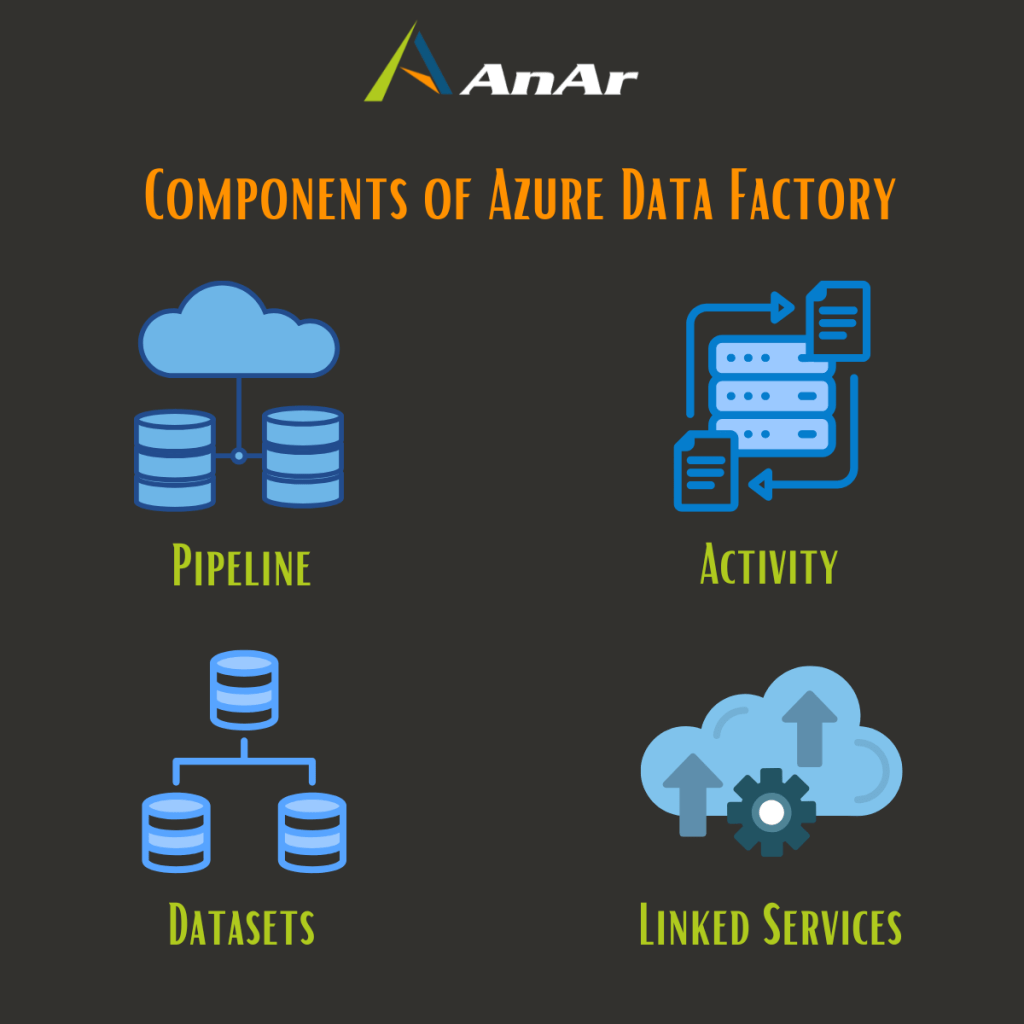

What are the main components of Azure Data Factory?

A subscription to Azure could contain single or multiple instances of Azure Data Factory, which is comprised of the following essential elements:

Pipeline

- In Azure Data Factory, a pipeline is a logical grouping of activities that perform a specific unit of work. It can be thought of as a workflow that defines the sequence of operations required to complete a task. A pipeline can include various activities that perform different types of data integration and transformation operations.

- An Azure Data Factory instance can have one or more pipelines, depending on the complexity of the data integration and transformation tasks that need to be performed.

- A pipeline in can be run manually or automatically using a trigger. Triggers allow you to execute pipelines on a schedule or based on an event, such as the arrival of new data in a data store.

- Activities in an Azure Data Factory pipeline can be chained together to operate sequentially or independently in parallel. Chaining activities together can create a workflow that performs a sequence of operations on the data, while running activities independently in parallel can optimize performance and efficiency.

- An example of an activity in a pipeline could be a data transformation activity, which performs operations on the data to prepare it for analysis or consumption. Data transformation activities can include various operations such as filtering, sorting, aggregating, or joining data from multiple sources. By combining multiple data transformation activities in a pipeline, you can create complex workflows that perform sophisticated data integration and transformation tasks. For example, you could use data transformation activities to cleanse and standardize customer data from multiple sources before loading data into a warehouse for analysis.

Activity

- Activities in a pipeline: In Azure Data Factory, activities represent an individual processing step or task in a pipeline. They can include data movement activities, data transformation activities, and control activities that define actions to perform on your data.

- Activities in a pipeline define actions to perform on your data, such as copying, transforming, or filtering the data. Each activity in a pipeline consumes and/or produces datasets, which are the inputs and outputs of the activity.

- Activities in Azure Data Factory can support data movement, data transformation, and control tasks. Data movement activities are used to copy data from one data store to another, while data transformation activities perform operations on the data, such as filtering, sorting, or aggregating it. Control activities are used to control the flow of data between activities and perform conditional logic or error handling.

- Activities in a pipeline can be executed in both a sequential and parallel manner. Chaining activities together in a sequence can create a workflow that performs a series of operations on the data, while executing activities in parallel can optimize performance and efficiency.

- Activities can either control the flow inside a pipeline or perform external tasks using services outside of Data Factory. For example, you might use a web activity to call a REST API or a stored procedure activity to execute a stored procedure in a SQL database.

- An example of an activity in Azure Data Factory could be a copy activity, which copies data from one store to another data store. The copy activity can include various settings to specify the source and destination data stores, the data to be copied, and any transformations to be applied during the copy operation. By combining multiple activities in a pipeline, you can create complex data integration and transformation workflows that meet your specific business needs.

Type of Activities

- Data Movement: Copies data from one store to another.

- Data Transformation: Uses various services like HDInsight (Hive, Hadoop, Spark), Azure Functions, Azure Batch, Machine Learning, and Data Lake Analytics.

- Data Control: Performs actions like invoking another pipeline, running SSIS packages, and using control flow activities like for each, set, until, and wait.

Datasets

- A dataset is a logical representation of the data that you want to work within your Azure Data Factory pipelines. A dataset defines the schema and location of the data, as well as the format of the data. A dataset can be thought of as a pointer to the data that you want to use in your pipeline.

- A dataset represents a particular data structure within a data store. For example, if you have a SQL Server database, you might have multiple datasets that each represent a table or view within that database.

- A dataset is used as the input or output of an activity in your pipeline. For example, if you have a pipeline that copies data from one storage account to another, you would define two datasets: one for the source data and one for the destination data.

- To work with data in a particular data store, you need to create a linked service that defines the connection details to that data store. In the case of Azure Blob storage, you would create a Blob Storage linked service that specifies the account name, access key, and other details of the storage account.

- Once you have defined a linked service, you can create a dataset that references the data that you want to use in your pipeline. In the case of Azure Blob storage, you would create a dataset that specifies the container and blob name, as well as the format of the data (such as Parquet, JSON, or delimited text).

- In addition to working with Blob storage, Azure Data Factory supports many other data stores, including SQL Database and Tables. For SQL Database, you would create a dataset that specifies the server’s name, database name, and table name, as well as any relevant authentication details. For Tables, you would create a dataset that specifies the storage account name and table name.

Linked Services

- Linked service is a configuration entity in Azure Data Factory.

- It defines a connection to the data source and specifies where to find the data.

- Linked services are similar to connection strings that define connection information for Data Factory to connect to external resources.

- The information in a linked service varies based on the resource being connected to

- A linked service can define a target data store or a compute service.

- Examples of linked services include Azure Blob Storage linked service for connecting a storage account to Data Factory and Azure SQL Database linked service for connecting to a SQL database.

Linked services serve two main purposes in Azure Data Factory:

- To define the connection to a data store, such as SQL Server database, PostgreSQL database, Azure Blob storage, and many others. This allows Data Factory to read from or write to that data store.

- To define a compute resource that can run an activity. For example, the HDInsight Hive activity requires an HDInsight Hadoop cluster as the compute resource to execute the activity. This enables Data Factory to leverage the power of various compute resources to perform data processing tasks.

Integration Runtime (IR)

- Integration Runtime (IR) acts as a bridge between Activities and Linked Services in Azure Data Factory.

- IR provides a computing environment where activities can either run on or get dispatched from.

- IR is responsible for moving data between data stores and the Data Factory environment.

- IR can be installed on an Azure VM, self-hosted machine, or run as a managed service.

- IR is used to provide connectivity to on-premises and cloud-based data stores and compute resources.

- IR can be configured to manage data flow traffic and data encryption in transit.

Integration Runtime (IR) has three types

- The first type is Azure IR which is a fully managed, serverless computing service provided by Azure. It enables data movement and transformation within the cloud data stores.

- The second type is Self-hosted IR which facilitates the movement of data between cloud data stores and a data store that is hosted on a private network.

- The third type is Azure-SSIS IR which is necessary for executing SSIS packages natively.

Triggers

The process of initiating the execution of a pipeline is performed by triggers. Triggers have the responsibility of determining the exact time when a pipeline should be executed. They can be set to execute pipelines based on a wall-clock schedule, at a regular interval, or when a specific event takes place. Essentially, triggers serve as the mechanism that decides when a pipeline should be executed, and they are the building blocks that represent the unit of processing required to start a pipeline run.

Trigger types include:

- Schedule: triggers pipeline execution at a specific time and frequency, such as every Sunday at 2:00 AM.

- Tumbling Window: triggers pipeline execution at periodic intervals, such as every two hours.

- Storage Events: triggers pipeline execution in response to a storage event, such as a new file being added to Azure Blob Storage.

- Custom Events: triggers pipeline execution in response to a custom event, such as an Event Grid event.

Data Flows

These capabilities allow data engineers to design data transformation workflows visually, without needing to write complex code—only data expressions are required. Using a visual editor, transformations can be carried out in multiple steps within the pipeline. These activities are executed in Azure Data Factory pipelines on a managed Spark cluster in Azure Databricks, providing scalable processing power. Azure Data Factory manages all aspects of data flow execution and code translation, simplifying the process of handling large volumes of data with efficiency and ease.

Mapping data flows

The mapping data flow feature allows users to create and manage graphical representations of data transformation logic, making it simple to transform data of any size. It enables the development of a library of reusable transformation routines, which can be executed at scale directly from Azure Data Factory pipelines. This feature streamlines complex data workflows and ensures consistency across various data transformation processes.

Control flow

Control flow is a method for organizing and managing the execution of activities within a pipeline. It enables activities to be connected in a defined sequence, allowing for the use of pipeline-level parameters and the passing of arguments when invoking the pipeline, whether on-demand or via a trigger. Control flow also supports custom state-passing and looping constructs, such as For-each iterators, which allow activities to be executed repeatedly, facilitating the automation of complex, iterative processes within Azure Data Factory pipelines.

Parameters

Parameters are read-only configurations that consist of key-value pairs. They are defined in a pipeline and passed during execution from the run context, which is created by a trigger or manually executed pipeline. Activities within the pipeline use the parameter values.

Datasets are strongly typed and reusable entities that can be referenced by activities. They can consume the properties defined in the dataset definition.

Linked services are also strongly typed and reusable entities. They contain the connection information for a data store or a computing environment.

Variables

Variables are a type of data structure that allows data engineers to temporarily store values within pipelines. They can also be used in combination with parameters to enable the transfer of values between pipelines, data flows, and other activities.

How does Azure Data Factory work?

Azure Data Factory provides a comprehensive, end-to-end platform for data engineers, offering an integrated system to manage data workflows efficiently.

Connect and Collect:

Organizations need to gather various types of data from a range of sources—whether on-premises or cloud-based, structured, semi-structured, or unstructured. This data arrives at different intervals and velocities, making it essential to have a system that can seamlessly integrate all of it. The first step in building a robust information pipeline is connecting to all necessary data sources and moving the data to a centralized location for processing.

Azure Data Factory offers a managed service to integrate and transfer data across various sources—such as SaaS applications, databases, file shares, and FTP web services—to centralized processing locations. This service eliminates the need for costly custom solutions and provides enterprise-level monitoring, alerts, and controls. The Copy Activity within a pipeline can move data from diverse sources to a central cloud repository, where it can then be analyzed and transformed using tools like Azure Data Lake Analytics or an Azure HDInsight Hadoop cluster.

Transform and Enrich:

Once the data is centralized, Azure Data Factory’s mapping data flows allow for processing and transformation without requiring detailed knowledge of Spark clusters or programming. For those who prefer manual coding, ADF also supports external activities, enabling transformations to be executed using compute services like HDInsight Hadoop, Spark, Data Lake Analytics, or even Machine Learning.

Publish:

Azure Data Factory supports continuous integration and continuous delivery (CI/CD) of data pipelines through platforms like Azure DevOps and GitHub, facilitating incremental development and deployment of ETL processes. Once data is transformed, it can be loaded into analytics engines such as Azure Data Warehouse, Azure SQL Database, or Azure Cosmos DB, making it accessible to business users via BI tools.

Monitor:

After deploying data integration pipelines, monitoring is crucial for ensuring successful execution and quick error detection. Azure Data Factory provides several built-in monitoring tools, including Azure Monitor, PowerShell, APIs, health panels on the Azure portal, and Azure Monitor logs, enabling real-time tracking and management of pipeline activities.

Final Wrap-up

Azure Data Factory serves as a pivotal tool for businesses aiming to leverage data efficiently. With its advanced data integration, transformation capabilities, user-friendly interface, and seamless compatibility across cloud and on-premises systems, it offers a complete platform to streamline data movement and uncover valuable insights. Whether orchestrating complex ETL workflows or delivering real-time analytics, Azure Data Factory scales effortlessly to meet the demands of your growing data needs.

At AnAr Solutions, our team of skilled data engineers can help you unlock the full potential of Azure Data Factory. From designing optimized data architectures to enhancing workflows and providing comprehensive monitoring, we ensure your data pipelines are secure, efficient, and capable of transforming raw data into actionable insights—empowering smarter, data-driven business decisions.