Serverless architecture is a hot topic in software product development today. Cloud vendors such as AWS, Microsoft Azure, and Google Cloud are investing heavily in serverless, and there are many books, conferences, events, projects, and software vendors dedicated to this area of cloud computing.

This blog post explains serverless architecture and its components through an example, then weighs its advantages and disadvantages.

What does Serverless architecture stand for?

As with many trends in software, there is no single, precise definition of Serverless. It covers two distinct but overlapping areas:

Backend as a Service (BaaS)

These services are also described as Mobile Backend as a Service (MBaaS). They are used by rich client applications, such as single-page web apps and mobile apps, that rely on cloud-hosted services to manage server-side logic and state.

Examples come from the vast ecosystem of cloud-accessible authentication services (AWS Cognito), databases (Parse, Firebase), and so on.

Functions as a Service (FaaS)

Serverless architecture can also refer to applications in which the application developer still writes the server-side logic. Unlike in traditional architectures, however, that logic runs in event-triggered, stateless compute containers that may last for only one invocation and are fully managed by a third-party vendor.

One of the most popular implementations of the function-as-a-service platform is AWS Lambda.
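To make the FaaS model concrete, here is a minimal sketch of a Python function in the shape AWS Lambda expects: a handler that receives an event and a context object and returns a result. There is no server loop or port binding; the platform calls the handler once per event. The function name `handler` and the `name` field in the event are assumptions for illustration only.

```python
from typing import Any


def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Entry point the FaaS platform invokes once per event.

    The platform, not our code, decides when and where this runs;
    we only describe what happens for a single event.
    """
    # 'name' is a hypothetical event field used only for this illustration.
    name = event.get("name", "world")
    return {"message": f"Hello, {name}!"}


if __name__ == "__main__":
    # Locally we can simulate an invocation by calling the function directly.
    print(handler({"name": "grocery shopper"}, None))
```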

BaaS and FaaS are operationally comparable (for example, no resource management) and are frequently used in tandem. All the major cloud providers have “Serverless Architecture Portfolios” that include both BaaS and FaaS products.

Now let’s understand the structural difference between traditional applications and apps built using serverless architecture through an example.

Understanding serverless technology using an application

Traditional Architecture

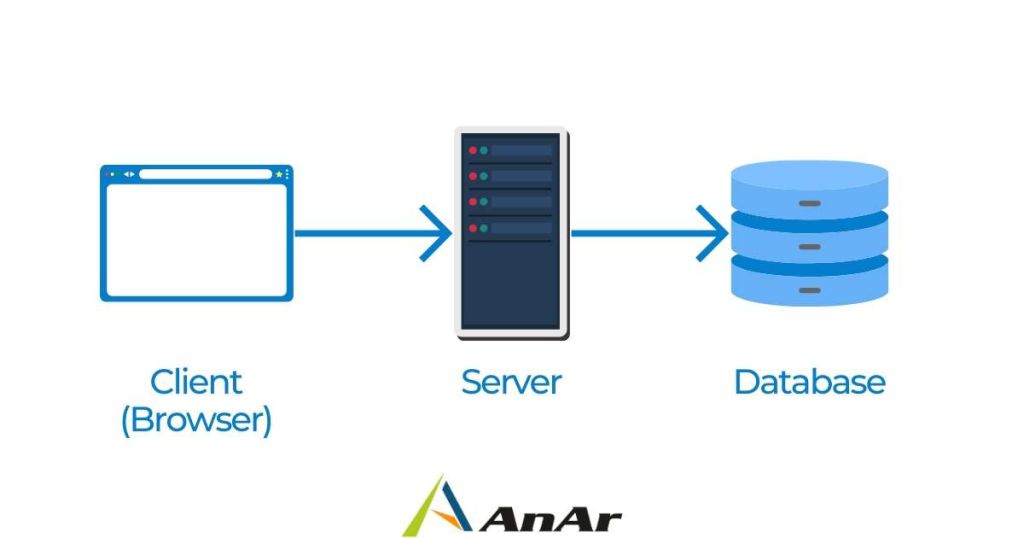

Here, we take an e-commerce application, say an online grocery store, as an example, because it fits neatly into a three-tier, client-oriented system with server-side logic.

In a traditional design, the architecture often looks like the diagram above. Let's assume the client side is written in HTML and JavaScript, and the server side in Java or JavaScript.

In traditional architecture, the client remains comparatively unintelligent, with the server application handling much of the system logic (authentication, page navigation, searching, and transactions).

Serverless Architecture

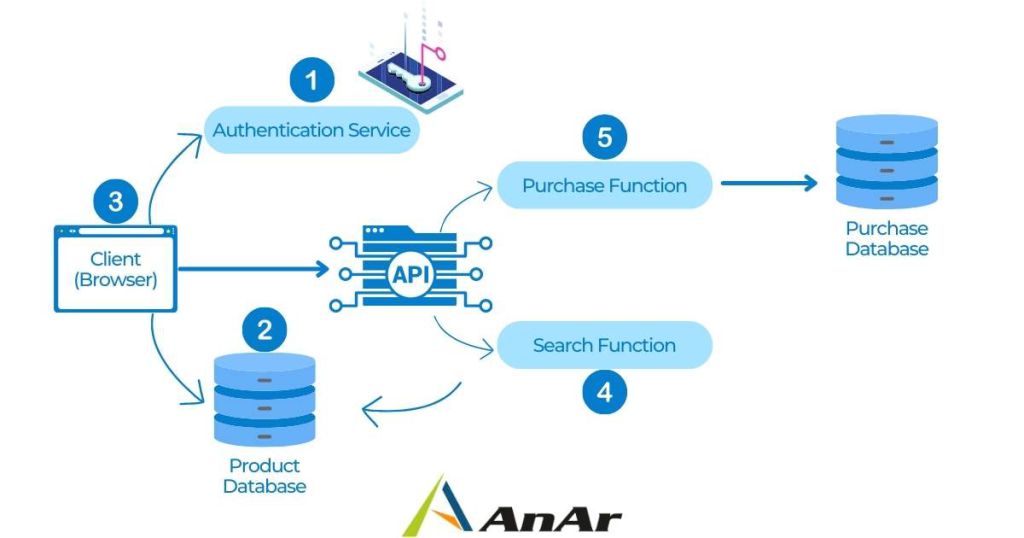

Though this is a greatly simplified view, we can see several significant differences.

Understanding the changes

- We replace the authentication logic that lived on the server in the traditional application with a third-party Backend-as-a-Service (BaaS), for example Auth0.

- Using another BaaS, we give the client direct access to a subset of our database (the product listing database). The database itself can be fully hosted by a third party, for example Google Firebase.

- These first two changes mean that some of the logic that used to run on the server now runs on the client.

- Through these changes, the client is well on its way to becoming a single-page application: it keeps track of user sessions, understands the UX structure of the application, reads from the database and turns the data into a usable view, and so on.

- In our grocery store, search previously ran on an always-running server. Instead, we implement it as a FaaS function that responds to HTTPS requests through an API Gateway (covered later). Both the client and the server-side function read product data from the same database. Because AWS Lambda supports Java and JavaScript, our original implementation languages, we can port the search code from the original grocery store server to the new search function without a complete rewrite.

- Finally, we replace the purchase functionality with another FaaS function. For security reasons, we keep this logic on the server rather than reimplementing it on the client. The client calls this function through an API gateway as well.
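The search function from the grocery example could look roughly like the sketch below. It assumes API Gateway's Lambda proxy integration, in which the HTTP query string arrives in `event["queryStringParameters"]` and the function returns a dict with `statusCode`, `headers`, and `body`. The in-memory `PRODUCTS` list, the `search_handler` name, and the `q` query parameter are hypothetical stand-ins for the shared product database and the real API contract.

```python
import json
from typing import Any

# Hypothetical stand-in for the product database that both the client
# and this function read from; in practice this would be a hosted database.
PRODUCTS = [
    {"id": 1, "name": "Organic Bananas", "price": 1.99},
    {"id": 2, "name": "Banana Bread", "price": 4.50},
    {"id": 3, "name": "Whole Milk", "price": 2.79},
]


def search_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Handle GET /search?q=... forwarded by an API gateway (proxy format)."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    query = params.get("q", "").strip().lower()

    if not query:
        return {
            "statusCode": 400,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"error": "missing query parameter 'q'"}),
        }

    # Simple case-insensitive substring match over product names.
    matches = [p for p in PRODUCTS if query in p["name"].lower()]
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"results": matches}),
    }
```

Because the function holds no session state and only reads product data, the platform can run as many copies in parallel as traffic requires. A purchase function would follow the same request/response shape but add the payment and order-writing logic that must stay server-side.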

When using FaaS, it is common practice to divide different logical requirements into separately deployed components.

In a traditional architecture, the central server application manages all the flow, control, and security. The Serverless version has no central arbiter of these concerns. Instead, we see a preference for choreography over orchestration, with each component playing a more architecturally aware role, an idea that also appears in microservices architectures.

This approach produces systems that are more flexible and easier to change, both as a whole and through independent updates to individual components, and there are some cost benefits as well.
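The preference for choreography over orchestration can be illustrated with a small in-process sketch. Instead of one central component calling each step in turn, the purchase step only publishes an `order_placed` event, and independent components subscribe and react on their own. The `EventBus` class, the event name, and the subscriber functions are all hypothetical; in a real deployment a managed message bus or queue would play the bus's role.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> None:
        # The publisher does not know who, if anyone, reacts to the event.
        for handler in self._subscribers[event_type]:
            handler(payload)


log: list[str] = []


def update_inventory(order: dict[str, Any]) -> None:
    # Hypothetical subscriber that reserves stock.
    log.append(f"inventory: reserve {order['items']}")


def send_confirmation(order: dict[str, Any]) -> None:
    # Hypothetical subscriber that emails the customer.
    log.append(f"email: confirm order {order['order_id']}")


bus = EventBus()
bus.subscribe("order_placed", update_inventory)
bus.subscribe("order_placed", send_confirmation)


def purchase(order: dict[str, Any]) -> None:
    # The purchase step announces the order; it does not orchestrate
    # inventory or email itself.
    bus.publish("order_placed", order)
```

Adding a new reaction, such as awarding loyalty points, means subscribing another function rather than editing `purchase`, which is the kind of independent change this style makes easier.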

These benefits come with trade-offs:

- There are more moving parts to keep track of than in the previous monolithic application.

- It requires better distributed monitoring, and we rely more heavily on the security capabilities of the underlying platform.

FaaS Characteristics: Understanding FaaS

We’ve already discussed FaaS, but it’s time to delve into what it means.

- With FaaS, we run back-end code without managing our own server systems or our own long-lived server applications. That second point, the absence of long-lived server applications, is the key difference when comparing FaaS with other modern architectural trends such as containers and PaaS.

- Function-as-a-Service (FaaS) does not require us to code against a specific framework or library. Apart from the handler entry point the platform calls, a function is written like an ordinary application in terms of language and environment. For example, AWS Lambda offers managed runtimes for languages such as JavaScript (Node.js), Python, Java, and .NET, and custom runtimes make it possible to use practically any language that compiles to a Unix process, such as Go.

- Deployment in FaaS is very different from traditional systems. We upload the code to the FaaS provider, and the provider handles everything else: provisioning resources, instantiating VMs, and managing processes.

- Horizontal scaling is fully automatic, elastic, and managed by the vendor. If your application needs to process 100 requests in parallel, the provider handles that without any extra configuration on your part. The compute containers executing your functions are ephemeral: the FaaS vendor creates and destroys them purely according to runtime needs.

- FaaS functions are usually triggered by event types defined by the provider. With AWS Lambda, these include S3 (file/object) updates, time (scheduled tasks), and messages added to a message bus such as Kinesis.

- FaaS functions can also be triggered in response to inbound HTTP requests; with AWS, this is typically done through an API gateway. In our grocery store, for example, we used an API gateway to invoke the search and purchase functions.
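A single function can be wired to several of these triggers. The sketch below inspects the incoming event to tell an S3 object notification, a Kinesis message, and a scheduled event apart. The field names follow the AWS event formats (`Records[*].eventSource` of `aws:s3` or `aws:kinesis`, and `source` of `aws.events` for scheduled rules), but they should be checked against the current AWS documentation; the `trigger_handler` and `describe_record` names and the returned strings are purely illustrative.

```python
import base64
from typing import Any
from urllib.parse import unquote_plus


def describe_record(record: dict[str, Any]) -> str:
    """Summarize one record from an S3 or Kinesis event."""
    source = record.get("eventSource")
    if source == "aws:s3":
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        return f"object updated: s3://{bucket}/{key}"
    if source == "aws:kinesis":
        # Kinesis delivers each record's payload base64-encoded.
        data = base64.b64decode(record["kinesis"]["data"]).decode("utf-8")
        return f"message received: {data}"
    return f"unhandled record source: {source}"


def trigger_handler(event: dict[str, Any], context: Any) -> list[str]:
    # Scheduled rules deliver a single event object rather than a list of records.
    if event.get("source") == "aws.events":
        return ["scheduled task fired"]
    return [describe_record(r) for r in event.get("Records", [])]
```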

Stateless

FaaS functions are often described as stateless because their local state is not guaranteed to persist across invocations. You do have storage available, such as memory and local disk, but there is no guarantee that it will still hold the same data on a later invocation. We should never assume that state from one invocation will be available to the next or to any subsequent invocation; anything that must persist belongs in an external store such as a database.
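The sketch below contrasts two ways of keeping a visit counter. The module-level variable may survive between invocations when the platform happens to reuse an instance, but nothing guarantees it, so the count it reports can silently reset. The second handler keeps the count in external storage. `KeyValueStore` is a hypothetical stand-in for a managed database or cache; it is in-memory here only so the example runs.

```python
from typing import Any


class KeyValueStore:
    """Hypothetical stand-in for an external, durable store.

    In a real function this would be a client for an external service.
    This in-memory version shares the flaw it is meant to avoid and
    exists only so the sketch runs locally.
    """

    def __init__(self) -> None:
        self._data: dict[str, int] = {}

    def increment(self, key: str) -> int:
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


# Anti-pattern: instance-local state. It lives only as long as this
# particular container, which the platform may discard at any time.
_local_count = 0


def unreliable_handler(event: dict[str, Any], context: Any) -> int:
    global _local_count
    _local_count += 1
    return _local_count  # resets whenever a new instance starts


# Preferred: state lives outside the function instance.
store = KeyValueStore()


def stateless_handler(event: dict[str, Any], context: Any) -> int:
    return store.increment("visits")
```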

Short Execution

FaaS invocations are limited in how long they can run. An AWS Lambda function can be configured with a timeout of at most 15 minutes (900 seconds), after which it is terminated; the cap was five minutes until 2018. Microsoft Azure Functions and Google Cloud Functions have comparable limits on their pay-per-use plans. This means you cannot run a single task that needs longer than the limit; you may need to break it into a chain of coordinated FaaS functions instead, whereas a traditional environment might run one long task that handles both execution and coordination.
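One common way to work within the limit is to process a large job in chunks and hand the remainder to the next invocation. The sketch checks the remaining time through the Lambda context object's `get_remaining_time_in_millis()` method and returns a continuation offset when it needs to stop. The `process_item` function, the `SAFETY_MARGIN_MS` value, and the `FakeContext` class used for local runs are assumptions; actually re-invoking the function with the returned offset (for example through a queue or a workflow service) is left out.

```python
from typing import Any

SAFETY_MARGIN_MS = 10_000  # assumed margin: stop well before the hard timeout


def process_item(item: int) -> int:
    # Hypothetical unit of work.
    return item * item


def chunked_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    items = event["items"]
    start = event.get("offset", 0)
    results = []
    for i in range(start, len(items)):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Out of time: report where the next invocation should resume.
            return {"results": results, "next_offset": i}
        results.append(process_item(items[i]))
    return {"results": results, "next_offset": None}  # job finished


class FakeContext:
    """Local stand-in for the Lambda context with scripted remaining times."""

    def __init__(self, remaining_ms_per_call: list[int]) -> None:
        self._remaining = list(remaining_ms_per_call)

    def get_remaining_time_in_millis(self) -> int:
        return self._remaining.pop(0) if self._remaining else 0
```

Each invocation stays well inside the timeout, and the coordination logic (who calls the next chunk) lives outside the function, which is the chaining pattern described above.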

High Startup Latency and Frequent Cold Starts

When no warm instance is available, a FaaS platform must first initialize a new instance of the function before it can handle an event; this is called a cold start. The resulting startup latency varies considerably with several factors and can range from a few milliseconds to several seconds.

Cold-start latency is affected by the language used, the number of libraries, the amount of code, the configuration of the Lambda function environment itself, whether the function needs to connect to VPC resources, and so on. Because many of these factors are under the developer's control, it is usually possible to reduce the startup latency of a cold start.
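A widely used technique for reducing the cost of initialization is to do expensive setup once, at module load time, rather than on every invocation. When the platform reuses a warm instance, later invocations skip that work. The sketch simulates this with a counter; `load_catalog`, `CATALOG`, `INIT_CALLS`, and `price_handler` are hypothetical names, with `load_catalog` standing in for work such as opening a database connection. As the Stateless section notes, instance reuse is an optimization, not a guarantee.

```python
from typing import Any, Optional

# Counts how often the expensive setup runs (for demonstration only).
INIT_CALLS = 0


def load_catalog() -> dict[str, float]:
    """Hypothetical expensive setup, e.g. opening a database connection."""
    global INIT_CALLS
    INIT_CALLS += 1
    return {"milk": 2.79, "bread": 3.49}


# Module-level code runs once per function instance, during the cold start.
CATALOG = load_catalog()


def price_handler(event: dict[str, Any], context: Any) -> Optional[float]:
    # Warm invocations on the same instance reuse CATALOG instead of rebuilding it.
    return CATALOG.get(event.get("item", ""))
```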

API Gateway

We mentioned API gateways earlier. An API gateway is an HTTP server with configured routes and endpoints, each linked to a resource that handles that route. In a Serverless architecture, such handlers are commonly FaaS functions.

When an API gateway receives a request, it finds the routing configuration that matches the request. If the route is backed by a FaaS function, the gateway invokes that function with a representation of the original request. The gateway usually lets you map HTTP request parameters to a more concise input for the function. Finally, it takes the function's output, converts it into an HTTP response, and returns it to the original caller.
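The request flow described above can be modeled as a tiny in-process gateway: it looks up a route by method and path, maps the HTTP request to a simpler input for the function, and converts the function's result back into an HTTP-style response. This is a toy model for illustration, not how any particular gateway product is configured; the `Request` type, the route table, and the function names are hypothetical.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Request:
    """Simplified HTTP request as seen by the gateway (hypothetical type)."""
    method: str
    path: str
    query: dict[str, str] = field(default_factory=dict)
    body: str = ""


FaasFunction = Callable[[dict[str, Any]], dict[str, Any]]
Mapper = Callable[[Request], dict[str, Any]]


def search_function(inp: dict[str, Any]) -> dict[str, Any]:
    # Hypothetical FaaS function; it receives only the mapped input.
    return {"results": [f"match for {inp['term']}"]}


def purchase_function(inp: dict[str, Any]) -> dict[str, Any]:
    return {"order": "accepted", "payload": inp["raw_body"]}


# Route configuration: (method, path) -> (FaaS function, request mapper).
ROUTES: dict[tuple[str, str], tuple[FaasFunction, Mapper]] = {
    ("GET", "/search"): (search_function, lambda r: {"term": r.query.get("q", "")}),
    ("POST", "/purchase"): (purchase_function, lambda r: {"raw_body": r.body}),
}


def gateway(request: Request) -> dict[str, Any]:
    # 1. Find the route that matches the incoming request.
    route = ROUTES.get((request.method, request.path))
    if route is None:
        return {"status": 404, "body": json.dumps({"error": "no matching route"})}
    function, to_input = route
    # 2. Map HTTP details to a concise input and invoke the function.
    output = function(to_input(request))
    # 3. Convert the function's output into an HTTP-style response.
    return {"status": 200, "headers": {"Content-Type": "application/json"},
            "body": json.dumps(output)}
```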

What cannot be called Serverless?

So far in this blog, we have described serverless as a combination of two technologies: Function-as-a-Service and Backend-as-a-Service.

Before we understand the pros and cons of serverless, let’s discuss what isn’t serverless.

Comparing FaaS with PaaS (Platform-as-a-Service)

Function-as-a-Service and Platform-as-a-Service have much in common, but they differ significantly in how they operate. With FaaS, scaling is not your concern: it happens automatically and independently for each request. With PaaS, you still need to think about scaling; even with auto-scaling enabled, you cannot manage resources at the level of individual requests.

Comparing FaaS with Logical Containers

One of the key benefits of Function-as-a-Service (FaaS) is that it removes the need to manage processes at the operating-system level. Containers, with Docker as the most visible example, are another popular way to abstract processes. As with PaaS, the difference lies in scaling: in FaaS, scaling is automatic, fine-grained, and transparent, and it is tied to automatic resource provisioning and allocation. Container platforms, by contrast, have traditionally required you to manage the size and shape of your clusters.

Benefits of FaaS

- Reduced operational cost: FaaS saves money by removing the capital expenditure of building on-premises infrastructure, since your system runs entirely on shared infrastructure. It also reduces the labor cost of operating and maintaining that infrastructure.

- Reduced development costs: Serverless Backend as a Service is the result of commoditizing entire application components. Authentication is a good example. Traditionally, we had to build login, signup, password management, and integration with other authentication providers ourselves. With serverless, we can integrate ready-built authentication functionality, such as Auth0, instead of developing it.

- Reduced scaling costs: The most important advantage is that you pay only for the compute you actually use, billed at 1 ms granularity on AWS Lambda (the billing increment was 100 ms until December 2020). Depending on the volume and shape of your traffic, this can be a major economic win.

- Reduced deployment and packaging complexity: Compared with deploying a whole server, packaging and deploying a FaaS function is simple. You put your code into a zip file and upload it, with no need to decide whether to run one or many containers on a machine. If you are just getting started, you may not need to package anything at all, since you may be able to write your code directly in the FaaS provider's console.

- Continuous experimentation and reduced time to market: As our teams and products move toward lean and agile processes, we want to keep trying new things and quickly update existing systems. A short path from new idea to initial deployment lets us run fresh experiments with minimal effort and cost, and simple redeployment through continuous delivery enables fast iteration on stable projects.
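The scaling-cost point above can be worked through with a small calculation. Compute cost for a function is roughly proportional to memory multiplied by billed duration (GB-seconds), where each invocation's duration is rounded up to the billing increment. The sketch compares a 100 ms increment with a 1 ms increment (AWS Lambda moved to 1 ms billing in December 2020). `PLACEHOLDER_PRICE` is a made-up price per GB-second, not a quoted AWS price, and request charges and free tiers are ignored.

```python
import math


def billed_ms(duration_ms: float, granularity_ms: int) -> int:
    # Round each invocation's duration up to the billing increment.
    return math.ceil(duration_ms / granularity_ms) * granularity_ms


def compute_cost(invocations: int, duration_ms: float, memory_mb: int,
                 granularity_ms: int, price_per_gb_second: float) -> float:
    # GB-seconds = invocations x billed seconds x memory in GB.
    gb_seconds = (invocations * billed_ms(duration_ms, granularity_ms) / 1000
                  * memory_mb / 1024)
    return gb_seconds * price_per_gb_second


PLACEHOLDER_PRICE = 0.00001  # hypothetical price per GB-second

# One million 23 ms invocations at 512 MB, under each billing increment.
coarse = compute_cost(1_000_000, 23, 512, 100, PLACEHOLDER_PRICE)
fine = compute_cost(1_000_000, 23, 512, 1, PLACEHOLDER_PRICE)
```

With 100 ms billing each 23 ms invocation is charged as 100 ms, so the coarse figure is about 4.3 times the fine one; for short functions, the billing increment can matter as much as the price itself. Idle time between invocations costs nothing in either case.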

Drawbacks of FaaS

Some of these trade-offs are inherent in the concepts themselves: technical progress cannot remove them, so they must always be taken into account. Others are tied to current implementations, and we should expect them to be fixed over time.

- Vendor control: Any outsourcing strategy hands control of part of your system to a third-party vendor. This lack of control can show up as system downtime, unexpected limits, cost changes, loss of functionality, forced API changes, and other issues.

- Vendor lock-in: Whatever Serverless features you use from one vendor are likely to be implemented differently by another. Switching providers may require changes to your operational tools as well as to your code and architecture.

- Security concerns: Each Serverless provider you use adds another distinct security implementation to your ecosystem. This enlarges your attack surface and raises the likelihood of a successful attack.

- Startup latency: Serverless providers have made progress here, but significant problems remain, particularly for functions that are invoked only sporadically or that need access to VPC resources. We can expect this area to keep improving.

- Execution duration: As discussed, FaaS functions are short-lived. AWS Lambda functions are terminated if they run longer than their configured timeout, which can be at most 15 minutes. AWS raised this cap once, from five minutes, in 2018, but any task that needs longer must still be split up or moved to another kind of service.

Conclusion

Serverless adoption has grown significantly in recent years. According to Datadog, AWS Lambda functions are invoked 3.5 times more often per day than in 2019, and at least one in every five enterprises uses FaaS solutions from AWS, Azure, or Google. If your organization is considering a serverless transition, please contact our team at AnAr Solutions. Our professionals will help you get started on your serverless journey.